Featured Tools:

Microsoft

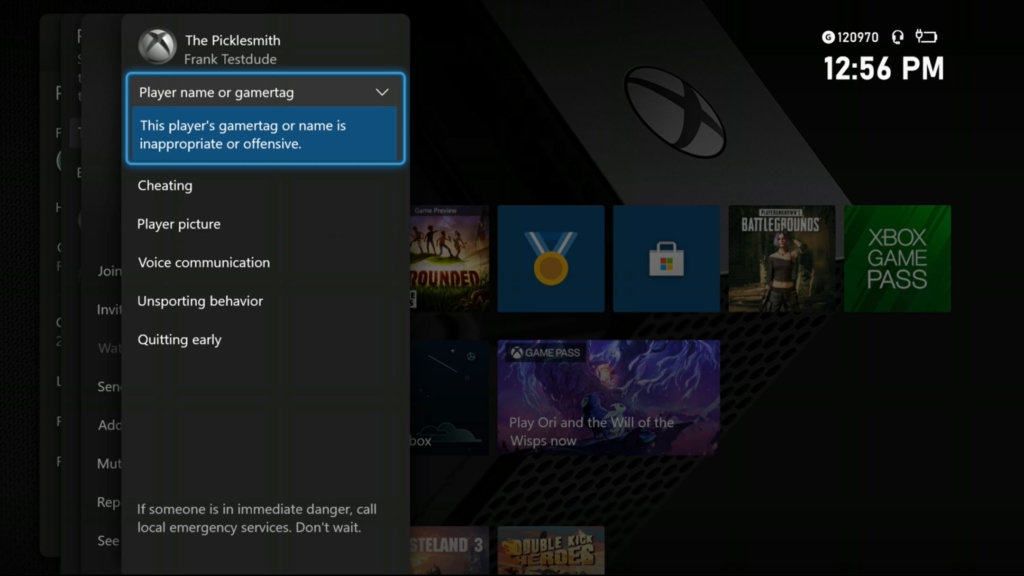

Xbox players are protected from unwanted content and conduct through a combination of technical means—including AI-based tools and automated content filters—and highly trained professional staff who review user reports and investigate allegations of misconduct.

The Xbox Moderation team serves as the company’s critical first responders. This team reviews user reports and items flagged by automated technology, often in real time, and takes action as needed to protect player experiences. Microsoft also leverages community managers and Xbox Ambassadors, encouraging them to report any issues they observe in individual Xbox game communities.

The Xbox Investigation team looks more deeply into issues when more serious allegations are made, and it engages real-world help for users in crisis through third-party partnerships. The team also handles appeals, in which users who have received enforcement actions can challenge the determination and have their cases reinvestigated.

As Microsoft continues to invest in artificial intelligence (AI) and automation for content moderation, the goal is not only to improve efficiency but also to reduce harm to its human-staffed teams by limiting their repeated exposure to harmful content. Despite advances in AI and automation, skilled human oversight will likely remain necessary, so Microsoft supports its safety teams with care plans and wellness and resiliency training to protect those who protect players.